Wow — this sounds like a cliché, but a handful of clever AI moves really can level the playing field in online gambling.

I’ll give you the practical bits first: targeted fraud detection, dynamic bonus personalization, and an adaptive game-recommendation engine that nudges retention without bulldozing the player experience.

These are not theoretical buzzwords; they’re discrete components you can audit, measure, and iterate on, and they’re the reasons some smaller operators now outpace older brands in player trust and speed.

I’ll walk you through how these systems work, concrete KPIs to watch, and the mistakes that trip up most teams, so you leave with a checklist you can actually use.

First up: why AI matters for a nimble operator — and how that sets the scene for tactical advantage over bigger rivals.

Hold on — big brands spend more on marketing, but small casinos often win where agility matters.

A fast AI feedback loop lets a smaller team deploy fraud rules, payment routing, and bonus tweaks in days rather than quarters, which translates into fewer false blocks and faster payouts for real players.

That advantage shows up in two places: reduced friction during cash-outs, and a measurable lift in NPS from players who feel “treated fairly.”

So when a player-facing problem comes up, the small operator can A/B test a fix and push it live the same week; the giants take months to align stakeholders and legacy systems.

Next, we’ll break down the main AI levers a scrappy casino uses to exploit that agility.

Key AI Levers That Move the Needle

Short list first: risk detection, personalization, smart routing, and fairness analytics — each with clear KPIs to monitor.

Risk detection uses supervised models (transaction labeling + behaviour sequences) to drop manual review rates while keeping fraud losses under control, typically reducing manual reviews by 30–60% within weeks of deployment.
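One way to cut manual reviews while keeping fraud losses under control is to pick the review threshold from labelled history rather than by feel. The sketch below assumes you already have a risk score per withdrawal and historical fraud labels. The helper name, the 95% recall target, and the toy data are hypothetical.

```python
from typing import Sequence


def pick_review_threshold(scores: Sequence[float], is_fraud: Sequence[bool],
                          min_recall: float = 0.95) -> tuple[float, float]:
    """Return (threshold, review_rate) for a hypothetical review policy.

    Transactions with score >= threshold go to manual review. The threshold is
    the highest cut-off that still sends at least `min_recall` of known fraud
    cases to a human.
    """
    total_fraud = sum(is_fraud)
    if total_fraud == 0:
        raise ValueError("need at least one labelled fraud case")
    # Walk candidate thresholds from the highest score downwards.
    for t in sorted(set(scores), reverse=True):
        caught = sum(1 for s, f in zip(scores, is_fraud) if f and s >= t)
        if caught / total_fraud >= min_recall:
            review_rate = sum(1 for s in scores if s >= t) / len(scores)
            return t, review_rate
    # Only reachable if min_recall > 1: review everything.
    return min(scores), 1.0
```

The review rate is the share of transactions at or above the threshold; at a fixed recall, a lower review rate means less manual work for the same fraud coverage.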

Personalization layers simple collaborative filtering with constraint-based rules to avoid encouraging chasing or problem-play triggers, and that balance is crucial for ethical retention.
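A minimal sketch of "collaborative filtering plus constraints", assuming the CF model has already produced a relevance score per game. The point of the design is that the responsible-gaming rules are hard filters applied *before* ranking, so relevance can never outweigh them. The `Game` fields, volatility labels, and stake limit are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Game:
    name: str
    cf_score: float   # relevance score from a collaborative-filtering model
    volatility: str   # "low", "medium" or "high" (hypothetical labels)
    min_stake: float


def recommend(candidates: list[Game], rg_flagged: bool,
              stake_limit: float, k: int = 3) -> list[str]:
    """Apply hard responsible-gaming constraints first, then rank by CF score."""
    allowed = [
        g for g in candidates
        if g.min_stake <= stake_limit                    # never exceed the player's limit
        and not (rg_flagged and g.volatility == "high")  # no high-volatility push for flagged players
    ]
    allowed.sort(key=lambda g: g.cf_score, reverse=True)
    return [g.name for g in allowed[:k]]
```

For a flagged player with a 2.0 stake limit, a high-volatility top pick and a game with a 5.0 minimum stake are both dropped, even though their CF scores are high.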

Smart routing means choosing the fastest available payment path (e.g., e-wallet vs card vs local voucher) for each player in real time, cutting average withdrawal latency by 12–48 hours.
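At its simplest, smart routing is "filter to eligible methods, then take the fastest". The sketch below assumes each method has an availability list, a per-transaction cap, and an expected payout time (for example a rolling median of recent payouts). The route names and figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Route:
    name: str
    countries: frozenset   # country codes where the method can pay out
    max_amount: float      # per-transaction cap for this method
    expected_hours: float  # e.g. rolling median of recent payout times


def choose_route(country: str, amount: float, routes: list[Route]) -> Optional[str]:
    """Pick the eligible payout method with the lowest expected latency.

    Returns None when no method is eligible, so the caller can fall back
    to a manual process instead of guessing.
    """
    eligible = [r for r in routes if country in r.countries and amount <= r.max_amount]
    if not eligible:
        return None
    return min(eligible, key=lambda r: r.expected_hours).name
```

Keeping `expected_hours` as a live statistic rather than a constant lets the router shift away from a method as soon as its payouts start slowing down.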

Fairness analytics give you continuous checks on RNG distributions and provider-level RTP drift, so you can spot anomalies before players do.
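A simple RTP drift screen compares observed RTP with the declared figure and asks how many standard errors apart they are. This is a sketch, assuming equal stakes per round and per-round returns expressed as payout / stake. The |z| > 3 alarm level is a hypothetical choice. Slot returns are heavy-tailed, so the normal approximation needs a large number of rounds; treat an alarm as a prompt for investigation, not proof of a fault.

```python
import math
import statistics


def rtp_drift_z(returns_per_unit_stake: list[float], declared_rtp: float) -> float:
    """z-score of observed RTP against the declared RTP.

    Assumes equal stakes per round; each value is payout / stake (0.0 for a loss).
    """
    n = len(returns_per_unit_stake)
    if n < 2:
        raise ValueError("need at least two rounds")
    observed_rtp = statistics.fmean(returns_per_unit_stake)
    sd = statistics.stdev(returns_per_unit_stake)  # sample SD of per-round return
    if sd == 0:
        raise ValueError("returns have zero variance")
    return (observed_rtp - declared_rtp) / (sd / math.sqrt(n))


def rtp_alarm(returns_per_unit_stake: list[float], declared_rtp: float,
              z_limit: float = 3.0) -> bool:
    """Flag for human investigation when |z| exceeds a (hypothetical) limit."""
    return abs(rtp_drift_z(returns_per_unit_stake, declared_rtp)) > z_limit
```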

Mini Case: How a Small Casino Cut Withdrawal Time by Half

Here’s a concise example. A regional operator integrated a lightweight ML model that predicted whether a withdrawal would trigger extended KYC based on three inputs: withdrawal amount, deposit method, and ID completeness score.

The model flagged 12% of transactions for manual review, down from 40%, saving both players and staff time and reducing average payout time from 72 hours to 36 hours.

They measured: % flagged, avg payout hours, and dispute rate — each showed clear improvement within a month, proving the value of targeted automation rather than blanket manual checks.

If you want to recreate it, start with a small labelled dataset, a conservative threshold, and rolling human-in-the-loop verification to avoid biased outcomes.
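The three-input model from this case might look something like the sketch below. The weights, bias, and threshold are invented for illustration (the case doesn't publish them), and `id_completeness` is assumed to be a 0 to 1 score; a real model would be fitted on your own labelled withdrawals. Note that the routing never auto-declines: the score only chooses between a fast track and a human reviewer.

```python
import math

# Hypothetical, hand-set weights for illustration only.
WEIGHTS = {"log_amount": 0.8, "card_deposit": 0.5, "id_completeness": -3.0}
BIAS = -4.0
REVIEW_THRESHOLD = 0.2  # conservative: a low bar for sending a case to a human


def extended_kyc_probability(amount: float, deposit_method: str,
                             id_completeness: float) -> float:
    """Logistic estimate that a withdrawal will trigger extended KYC."""
    z = (BIAS
         + WEIGHTS["log_amount"] * math.log1p(amount)
         + WEIGHTS["card_deposit"] * float(deposit_method == "card")
         + WEIGHTS["id_completeness"] * id_completeness)
    return 1.0 / (1.0 + math.exp(-z))


def route_withdrawal(amount: float, deposit_method: str,
                     id_completeness: float) -> str:
    """Route to fast track or manual review; the model never auto-declines."""
    p = extended_kyc_probability(amount, deposit_method, id_completeness)
    return "manual_review" if p >= REVIEW_THRESHOLD else "fast_track"
```

A small e-wallet withdrawal from a fully verified player scores about 0.02 and is fast-tracked; a large card-funded withdrawal with a 0.3 ID score lands around 0.9 and goes to a reviewer.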

Next, let’s look at the data hygiene and ethical safeguards you need before you deploy anything like this in production.

Data, Privacy and Responsible Use — the Guardrails

Something’s off in many fast rollouts: they skip data governance.

Good governance begins with auditable consent flows, clear retention policies, and hashed identifiers for model inputs, so you avoid leaking PII into analytics.
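A minimal sketch of "hashed identifiers for model inputs". It uses a keyed hash (HMAC-SHA256) rather than a plain hash, which is a choice made here: player IDs are low-entropy, so an unkeyed SHA-256 of an ID can be reversed by hashing every plausible ID. The field names are hypothetical, and the key should live outside the analytics environment.

```python
import hashlib
import hmac

# Hypothetical PII field names; adapt to your own schema.
PII_FIELDS = {"player_id", "name", "email", "phone", "address", "date_of_birth"}


def pseudonymise(player_id: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256) of a player ID, usable as a join key in analytics."""
    return hmac.new(secret_key, player_id.encode("utf-8"), hashlib.sha256).hexdigest()


def to_model_row(record: dict, secret_key: bytes) -> dict:
    """Drop PII fields and replace the raw ID with its keyed hash."""
    row = {k: v for k, v in record.items() if k not in PII_FIELDS}
    row["player_key"] = pseudonymise(record["player_id"], secret_key)
    return row
```

The same player always maps to the same `player_key`, so features can still be joined across tables without the raw ID ever reaching the model.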

For Australian-facing players, ensure you align with the spirit of local privacy expectations even if your licence is offshore — players notice when a site treats their data like currency, and that shows up in churn metrics.

Also, bake in responsible-gaming detectors (session length spikes, deposit frequency anomalies) that throttle offers and prompt voluntary limits; this is both ethical and a long-term retention play.

Next up: tactical playbooks for building and validating each AI component, including sample calculations and KPIs.

Practical Playbooks & Simple Calculations

“Wait, how do I quantify bonus ROI?” Great question, and one too many teams ignore.

A straightforward formula: incremental net value = (number of targeted players × retention uplift × average lifetime margin per retained player) − offer cost, where offer cost = number of players × redemption rate × bonus value.

For example, take a €10 no-wager reload given to 1,000 players that lifts weekly retention by 4 percentage points, with an average lifetime margin of €25. Uplift = 1,000 × 0.04 × €25 = €1,000; cost (assuming every player redeems) = 1,000 × €10 = €10,000, a net loss of €9,000. You'd need additional long-term churn reduction or cross-sell to justify it, so model three scenarios (pessimistic/expected/optimistic) before rollout.
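The same arithmetic as a small scenario model. The scenario assumptions (uplifts and redemption rates) are hypothetical placeholders; the expected case reproduces the €10 reload example. `break_even_uplift` follows directly from setting the net value to zero.

```python
def offer_net_value(players: int, retention_uplift: float, lifetime_margin: float,
                    bonus_value: float, redemption_rate: float = 1.0) -> float:
    """Incremental margin from retained players minus the cost of redeemed bonuses."""
    gain = players * retention_uplift * lifetime_margin
    cost = players * redemption_rate * bonus_value
    return gain - cost


def break_even_uplift(lifetime_margin: float, bonus_value: float,
                      redemption_rate: float = 1.0) -> float:
    """Retention uplift (as a fraction) at which the offer pays for itself."""
    return redemption_rate * bonus_value / lifetime_margin


# Hypothetical scenario assumptions; replace with your own estimates.
SCENARIOS = {
    "pessimistic": {"retention_uplift": 0.02, "redemption_rate": 1.0},
    "expected": {"retention_uplift": 0.04, "redemption_rate": 1.0},
    "optimistic": {"retention_uplift": 0.08, "redemption_rate": 0.8},
}

results = {
    name: offer_net_value(1_000, s["retention_uplift"], 25.0, 10.0, s["redemption_rate"])
    for name, s in SCENARIOS.items()
}
```

Under these assumptions even the optimistic case loses about €6,000, and breaking even on the offer alone needs a 40-point retention lift (10 / 25), which is why the longer-term churn and cross-sell effects have to be modelled explicitly.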

If you’re using AI to target offers, always report back on realized uplift and update the model to correct for optimistic attribution.

This raises a key point about model drift and how to monitor it in production — which we cover next.

Monitoring: Drift, Fairness & Operational Alarms

Short rule: if you can’t explain your model’s decisions at the player level, don’t deploy it to critical flows.

Set up monitoring for label distribution drift, feature importance shifts, and business KPIs (false positive rate for transaction blocks, avg payout time, dispute rate).

Simple thresholds work: a 20% shift in flagged rate or a doubling of disputes triggers a rollback and human review.
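Those two rules can be encoded as a single rollback check. This sketch reads "a 20% shift" as a relative change (e.g. 12% flagged moving to above 14.4% or below 9.6%), which is an interpretation, and assumes dispute counts come from comparable windows. The zero-baseline handling is a hypothetical choice.

```python
def should_roll_back(baseline_flag_rate: float, current_flag_rate: float,
                     baseline_disputes: int, current_disputes: int,
                     max_relative_shift: float = 0.20,
                     max_dispute_ratio: float = 2.0) -> bool:
    """True when the flagged rate moves by more than 20% (relative) or disputes double.

    Dispute counts should cover comparable windows (same length, similar volume).
    """
    if baseline_flag_rate <= 0:
        raise ValueError("baseline flag rate must be positive")
    flag_shift = abs(current_flag_rate - baseline_flag_rate) / baseline_flag_rate
    # Treat a zero baseline as one dispute so a single new dispute doesn't trip the alarm.
    disputes_doubled = current_disputes >= max_dispute_ratio * max(baseline_disputes, 1)
    return flag_shift > max_relative_shift or disputes_doubled
```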

Make the human reviewer’s feedback feed back into the training set (human-in-the-loop) so your model improves rather than entrenching mistakes.

We’ll now give you two concrete examples of common mistakes and how to fix them fast.

Common Mistakes and How to Avoid Them

Mistake 1: Over-automation of KYC — models that auto-decline create angry players and reputational damage, especially when ID image quality varies by country; the fix is staged automation with immediate manual review options and clear player messaging.

Mistake 2: Personalized offers without guardrails — pushing larger bets to those showing risky patterns increases short-term revenue and long-term losses; the fix is hard constraints on offer size tied to responsible-gaming signals.
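A hard cap of this kind can be a few lines that run after the targeting model, so no model score can override it. The tiers and amounts below are hypothetical; the point is that the cap tightens as responsible-gaming signals accumulate and drops to zero when they stack up.

```python
def capped_offer(proposed_bonus: float, rg_signal_count: int,
                 base_cap: float = 50.0) -> float:
    """Hard cap on bonus size that tightens as responsible-gaming signals accumulate.

    Tiers and amounts are hypothetical: no signals -> base cap, one signal ->
    half the cap, two or more -> no offer at all.
    """
    if rg_signal_count >= 2:
        return 0.0
    cap = base_cap if rg_signal_count == 0 else base_cap / 2
    return min(proposed_bonus, cap)
```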

Both mistakes are behavioral as much as technical — they happen when product teams pursue KPIs without ethical boundaries — so make ethics part of your KPI dashboard.

Before we move to tool comparisons, here’s a quick checklist you can use right away.

Quick Checklist — Ready-to-Use

- Collect labeled data for withdrawals and manual reviews (30–90 days minimum), and store features separately from PII.

- Start with conservative thresholds and human-in-the-loop verification for 2–4 weeks.

- Monitor 3 core KPIs: avg payout time, manual review rate, and dispute rate; set alert thresholds.

- Implement responsible-gaming detectors and auto-pause offers when signals exceed limits.

- Run fairness checks on RNG and provider-level RTP reports monthly and publish a summary for transparency.

Each of these items can be implemented incrementally, and you should treat them as minimum viable controls before wider AI rollouts.
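For the "monitor 3 core KPIs" item, a minimal calculation over a window of withdrawals might look like this. The `Withdrawal` record shape is hypothetical. Pending withdrawals are excluded from the payout-time average, which can make it look better while a backlog builds, so it's worth tracking the pending count alongside it.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Withdrawal:
    requested_at: datetime
    paid_at: Optional[datetime]  # None while still pending
    manually_reviewed: bool
    disputed: bool


def core_kpis(withdrawals: list[Withdrawal]) -> dict:
    """Average payout hours (completed only), manual review rate, dispute rate."""
    if not withdrawals:
        raise ValueError("no withdrawals in window")
    hours = [(w.paid_at - w.requested_at).total_seconds() / 3600
             for w in withdrawals if w.paid_at is not None]
    n = len(withdrawals)
    return {
        "avg_payout_hours": sum(hours) / len(hours) if hours else float("nan"),
        "manual_review_rate": sum(w.manually_reviewed for w in withdrawals) / n,
        "dispute_rate": sum(w.disputed for w in withdrawals) / n,
    }
```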

Comparison Table: Approaches & Tools

| Approach | Strength | Weakness | Best For |
|---|---|---|---|
| Rule-based + human review | Simple, explainable | Scales poorly | Early-stage ops |
| Supervised ML for risk | Reduces manual work fast | Needs labeled data | High-volume withdrawals |
| Recommender + constraints | Personalized UX | Risk of over-personalization | Retention with ethics |
| On-chain provably fair checks | Immutable audit trail | Not mainstream for fiat players | Transparency-focused brands |

Use the table to pick an initial path based on your volume and regulatory posture, and remember that hybrids are often the fastest route to measurable wins.

Where to Try This in the Real World

If you want to experiment without building everything in house, some lighter integrations let you test payout routing and recommender features quickly; if you prefer a hands-on approach, run an A/B test on a small cohort and measure the three KPIs above.

When you’re ready to let real players use the improved flows, make the opt-in clear and provide instant help links for any player who feels uneasy.

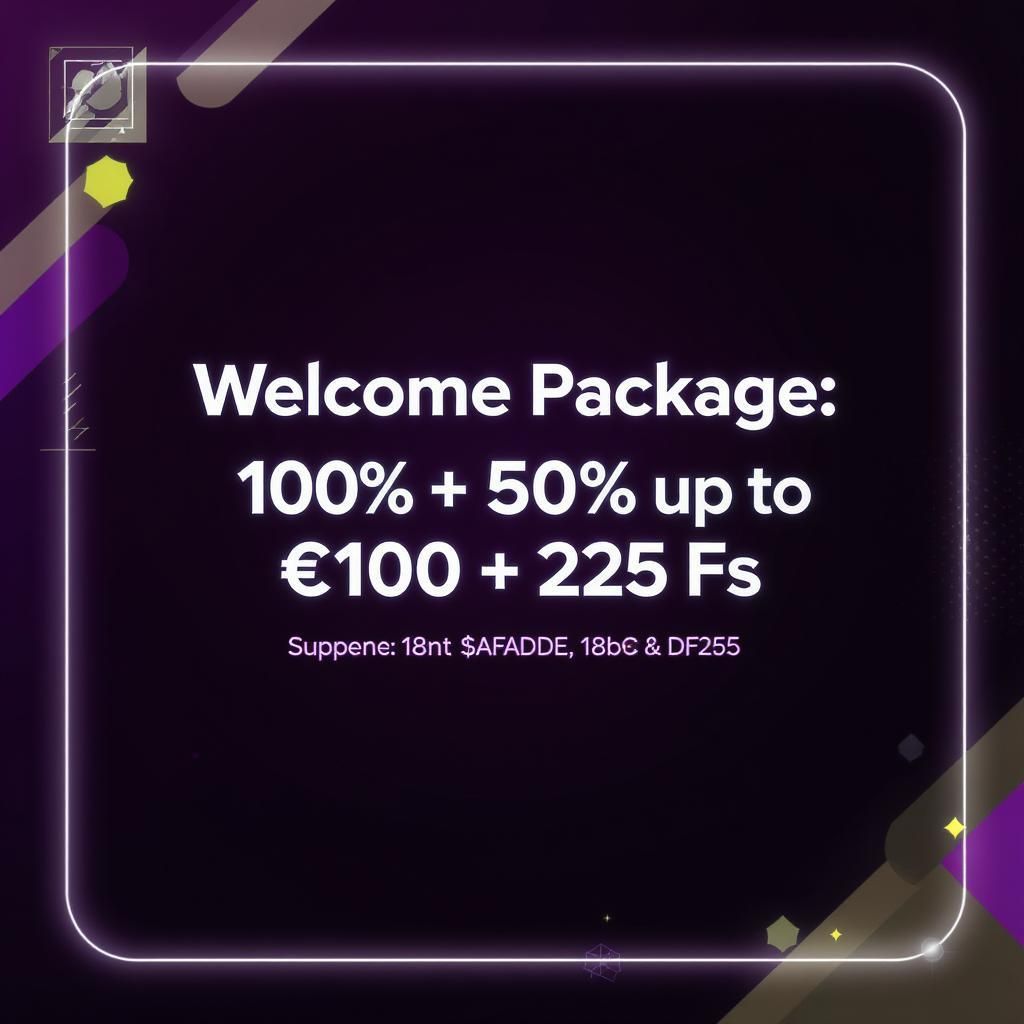

If you want a quick, informal check, try these flows yourself as a player and observe how quicker payouts and simpler bonuses influence your own behaviour; it's a fast way to learn what matters most to real customers.

Next: a few operational rules that keep experiments safe and reversible.

Operational Rules for Safe Experiments

Keep experiments limited in scope (≤5% of active users) and duration (≤30 days) until you have strong, positive safety signals like no uptick in disputes or RG flags.
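One way to hold an experiment to ≤5% of users and ≤30 days is deterministic hash bucketing plus a date guard. This is a sketch, assuming a pseudonymous player key is available. Hashing the experiment name together with the player key keeps assignment stable across sessions and independent between experiments; the function names are hypothetical.

```python
import hashlib
from datetime import date


def in_experiment(player_key: str, experiment: str, share: float = 0.05) -> bool:
    """Deterministically assign roughly `share` of players to an experiment."""
    digest = hashlib.sha256(f"{experiment}:{player_key}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # uniform integer in [0, 2**64)
    return bucket < share * 2**64


def experiment_active(start: date, today: date, max_days: int = 30) -> bool:
    """True for at most `max_days` calendar days, counting the start day."""
    return 0 <= (today - start).days < max_days
```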

Log every decision the model makes along with the top-3 contributing features so reviewers can replicate and correct errors quickly.
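For a linear or logistic model, a feature's contribution to the score is simply weight × value, so the top three can be logged cheaply with each decision. The sketch assumes such a linear model and a JSON log line; the field names are hypothetical. Tree ensembles need a proper attribution method (SHAP is a common one) instead of this shortcut.

```python
import json
from datetime import datetime, timezone


def top_contributions(weights: dict[str, float], features: dict[str, float],
                      k: int = 3) -> list[tuple[str, float]]:
    """Largest |weight * value| terms of a linear score."""
    contribs = {name: weights[name] * features[name] for name in weights}
    return sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)[:k]


def decision_log_entry(txn_id: str, decision: str, score: float,
                       weights: dict[str, float], features: dict[str, float]) -> str:
    """One JSON line per decision, with the top-3 contributing features."""
    entry = {
        "txn_id": txn_id,
        "decision": decision,
        "score": round(score, 4),
        "top_features": [{"feature": n, "contribution": round(c, 4)}
                         for n, c in top_contributions(weights, features)],
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(entry)
```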

Keep fallback flows: if a model or third-party fails, the system should revert to a conservative rule set and route to human review; this ensures continuity and player trust.
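A minimal sketch of that fallback, assuming the model is any callable returning a routing decision. The conservative rule set (only small, verified withdrawals skip review) and the logger name are hypothetical. It also falls back when the model returns something unexpected, not only when it raises.

```python
import logging
from typing import Callable

logger = logging.getLogger("withdrawal_routing")  # hypothetical logger name

ALLOWED_DECISIONS = {"fast_track", "manual_review"}


def conservative_rules(amount: float, id_verified: bool) -> str:
    """Hypothetical fallback: only small, fully verified withdrawals skip human review."""
    if id_verified and amount <= 200:
        return "fast_track"
    return "manual_review"


def decide(amount: float, id_verified: bool,
           model: Callable[[float, bool], str]) -> str:
    """Use the model when it works; otherwise revert to the conservative rules."""
    try:
        decision = model(amount, id_verified)
    except Exception:
        logger.exception("model call failed; using conservative rules")
        return conservative_rules(amount, id_verified)
    if decision not in ALLOWED_DECISIONS:
        logger.warning("unexpected model output %r; using conservative rules", decision)
        return conservative_rules(amount, id_verified)
    return decision
```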

Once these are in place, rollout can be gradual and accountable, which is the path many smaller operators have used to outpace legacy giants.

If you want to test the full experience live, take a measured spin as a player: try the bonuses and time yourself through the cash-out process to validate the claims in practice.

Mini-FAQ

Q: Will AI replace humans in fraud teams?

A: No — AI augments humans by prioritising cases and reducing noise, but you still need experienced reviewers for edge cases and appeals, and you should measure reviewer accuracy as part of your loop.

Q: How do you prevent bias in KYC models?

A: Use stratified training samples, monitor false positive rates across key demographic proxies, and maintain human oversight for auto-reject decisions to correct bias early.
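Monitoring false positive rates across groups can be as simple as the sketch below. The group labels stand in for whatever proxy you use (for example, country of the issuing ID document), and the 5-point gap that triggers an alert is a hypothetical threshold.

```python
from collections import defaultdict


def fpr_by_group(groups: list[str], flagged: list[bool],
                 actually_bad: list[bool]) -> dict[str, float]:
    """False positive rate per group: share of legitimate cases that were flagged."""
    false_pos: dict = defaultdict(int)
    legit: dict = defaultdict(int)
    for g, f, bad in zip(groups, flagged, actually_bad):
        if not bad:
            legit[g] += 1
            if f:
                false_pos[g] += 1
    return {g: false_pos[g] / n for g, n in legit.items()}


def fpr_gap_alert(rates: dict[str, float], max_gap: float = 0.05) -> bool:
    """Alert when the best- and worst-treated groups differ by more than max_gap."""
    return max(rates.values()) - min(rates.values()) > max_gap
```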

Q: What are minimal RG signals to implement immediately?

A: Session time > 4 hours/day, deposit frequency spike > 200% week-over-week, and repeated failed limit-setting attempts — any of these should trigger a soft pause and a help prompt.
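Those three signals translate almost directly into code. Two readings here are assumptions: "deposit frequency spike > 200% week-over-week" is taken as an increase of more than 200% (more than 3× last week's count), and "repeated" failed limit-setting attempts is taken as two or more. The `PlayerDay` record is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlayerDay:
    session_hours_today: float
    deposits_this_week: int
    deposits_last_week: int
    failed_limit_attempts: int


def rg_triggers(p: PlayerDay) -> list[str]:
    """Return the responsible-gaming signals that fired for this player today."""
    triggers = []
    if p.session_hours_today > 4:
        triggers.append("long_session")
    # "> 200% week-over-week" read as more than 3x last week (assumption);
    # a spike is undefined when last week had no deposits.
    if p.deposits_last_week > 0 and p.deposits_this_week > 3 * p.deposits_last_week:
        triggers.append("deposit_spike")
    if p.failed_limit_attempts >= 2:  # "repeated" assumed to mean 2 or more
        triggers.append("failed_limit_setting")
    return triggers


def next_action(p: PlayerDay) -> str:
    """Any single trigger is enough for a soft pause and a help prompt."""
    return "soft_pause_and_help_prompt" if rg_triggers(p) else "continue"
```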

18+ only. Gambling involves risk; outcomes are random and losses can occur. If you feel your gambling is becoming problematic, seek help via local resources such as Gambling Help Online (Australia) or contact support for self-exclusion and limit tools before you continue. This article is informational and not financial advice.


About the Author

Experienced product lead with a background in payments and fraud for online gaming platforms, based in Australia. Writes candid, hands-on guides to help small teams adopt practical AI without sacrificing player safety or long-term trust. The guidance above reflects field tests and collaboration with compliance teams across multiple operators.